Multimodal agents tutorial: How to use Gemini, LangChain, and LangGraph to build agents for object detection

Matthew Dalida

Technical Account Manager, Google Cloud

May Shin Lyan

Technical Account Manager, Google Cloud

Here’s a common question that can feel confusing when building AI agents: How can you use the latest Gemini models and open-source frameworks like LangChain and LangGraph to create multimodal agents that can detect objects?

Detecting objects is critically important for use cases ranging from content moderation to multimedia search and retrieval. LangChain provides tools to chain together LLM calls and external data. LangGraph provides a graph structure for building more controlled and complex multi-agent apps.

In this post, we’ll show you which decisions you need to make to combine Gemini, LangChain and LangGraph to build multimodal agents that can identify objects. This will provide a foundation for you to start building enterprise use cases like:

- Content moderation: Advertising policies, movie ratings, brand infringement

- Object identification: Using different sources of data to verify whether an object exists on a map

- Multimedia search and retrieval: Finding files that contain a specific object

First decision: No-code/low-code, or custom agents?

The first decision enterprises have to make is whether to use no-code/low-code options or build custom agents. If you are building a simple agent like a customer service chatbot, you can use Google’s Vertex AI Agent Builder to build one in a few minutes, or start from the pre-built agents available in the Google Agentspace Agent Gallery.

But if your use case requires orchestrating multiple agents and integrating with custom tooling, you will have to build custom agents, which leads to the next question.

Second decision: What agentic framework to use?

It’s hard to keep up with the many agentic frameworks out there releasing new features every week. Top contenders include CrewAI, AutoGen, LangGraph and Google’s ADK. Some of them, like ADK and CrewAI, have higher levels of abstraction, while others, like LangGraph, allow a higher degree of control.

That’s why in this post we focus on building a custom agent using the open-source LangChain and LangGraph as the agentic framework and Gemini 2.0 Flash as the LLM brain.

Code deep dive

This example code identifies an object in an image, an audio file, and a video. In this case, we use a dog as the object to be identified. Different agents (an image analysis agent, an audio analysis agent, and a video analysis agent) perform different tasks, but all work together toward a common goal: object identification.

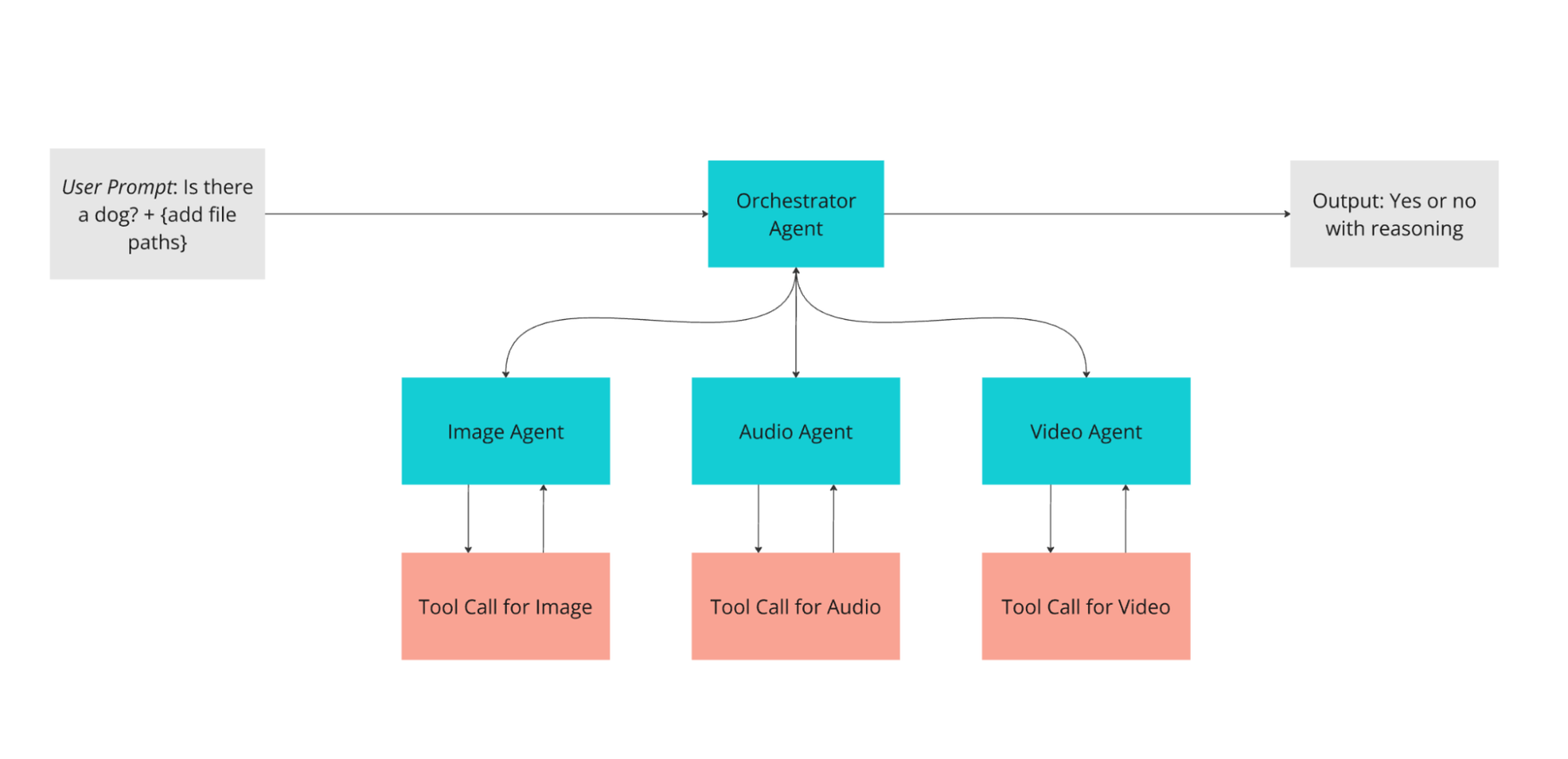

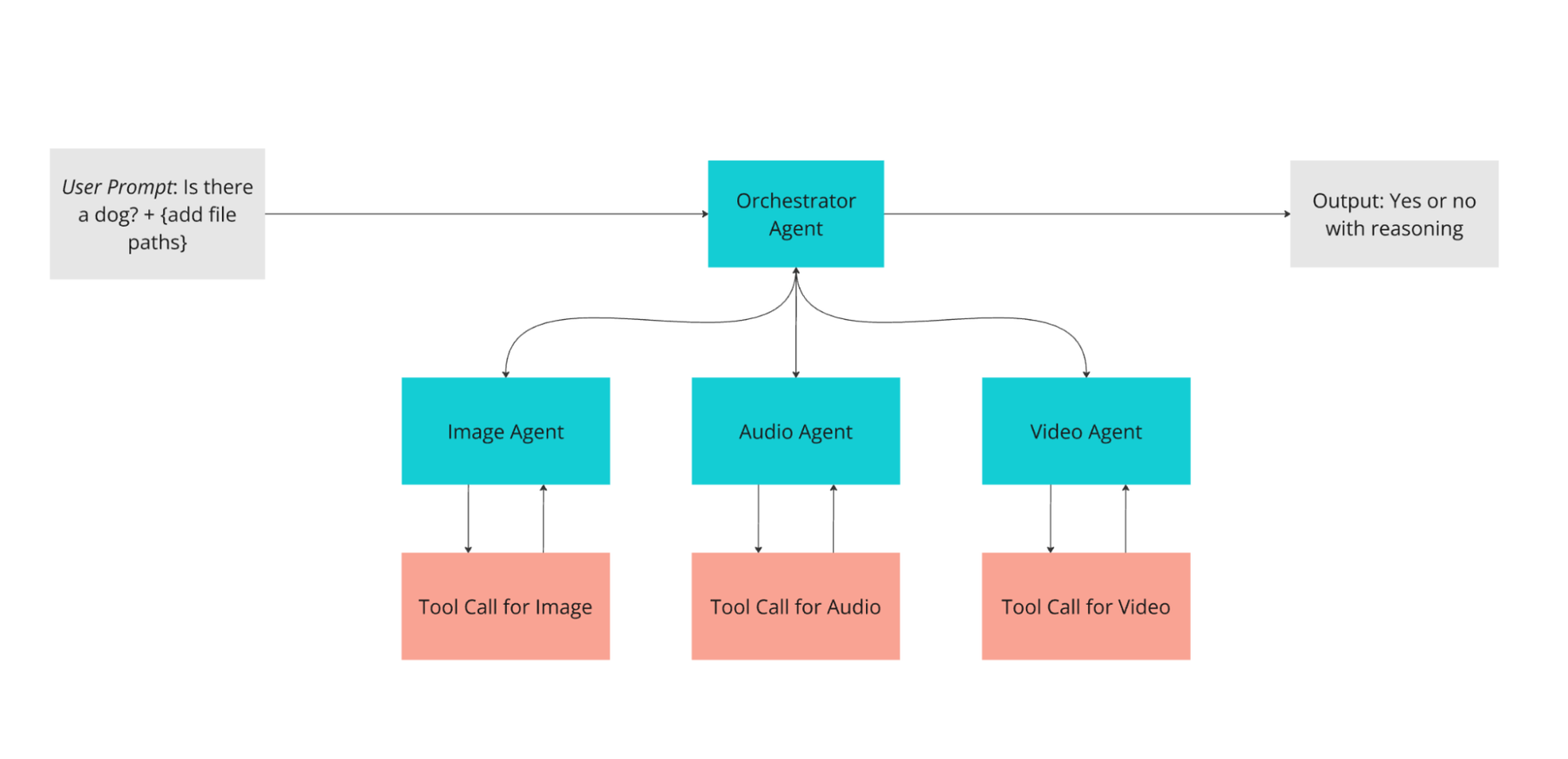

Generative AI workflow for object detection

In this gen AI workflow, a user asks the agent to verify whether a specific object exists in the provided files. The Orchestrator Agent calls the relevant worker agents (image_agent, audio_agent, and video_agent), passing along the user question and the relevant files. Each worker agent calls its respective tooling to convert the provided file to base64 encoding. Each agent's final finding is then passed back to the Orchestrator Agent, which synthesizes the findings and makes the final determination. This code can serve as a starting template whenever you need an agent to reason and make a decision, or to draw conclusions from different sources.
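The workflow above can be sketched in plain Python to make the data flow concrete. This is a minimal illustration, not the code from this post: `encode_file`, `make_worker`, `orchestrate`, and `fake_model` are hypothetical names, the model call is a stub standing in for Gemini, and the final `any(...)` check is a placeholder for the synthesis step that the Orchestrator Agent's LLM would perform.

```python
import base64
import mimetypes
from pathlib import Path
from typing import Callable, Dict, List


def encode_file(path: str) -> Dict[str, str]:
    """Tool step: read a file and return its base64 payload plus a guessed MIME type."""
    mime_type, _ = mimetypes.guess_type(path)
    data = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return {"mime_type": mime_type or "application/octet-stream", "data": data}


def fake_model(question: str, payload: Dict[str, str]) -> bool:
    """Hypothetical stand-in for a Gemini call; it does no real detection."""
    return payload["mime_type"].startswith("image/")


def make_worker(modality: str, call_model: Callable[[str, Dict[str, str]], bool]):
    """Build a worker agent that encodes its file and asks the model about it."""
    def worker(question: str, path: str) -> Dict[str, object]:
        payload = encode_file(path)
        return {"agent": f"{modality}_agent", "found": call_model(question, payload)}
    return worker


def orchestrate(question: str, files: Dict[str, str], workers: Dict[str, Callable]) -> Dict[str, object]:
    """Route each file to the matching worker, then combine the findings."""
    findings: List[Dict[str, object]] = [
        workers[modality](question, path)
        for modality, path in files.items()
        if modality in workers
    ]
    # Placeholder synthesis: a real orchestrator would let the LLM weigh the findings.
    return {"findings": findings, "object_detected": any(f["found"] for f in findings)}
```

To try it, build the workers with `{m: make_worker(m, fake_model) for m in ("image", "audio", "video")}` and call `orchestrate("Is there a dog?", {"image": "dog.png"}, workers)` with paths to your own files. Swapping `fake_model` for a function that sends the base64 payload to a real model is the only change the sketch needs.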

If you want to create multi-agent systems with ADK, here is a video production agent built by a Googler that generates video commercials from user prompts, using Veo for video content generation, Lyria for composing music, and Google Text-to-Speech for narration. This example shows that many ingredients can be combined to meet your agentic goals, in this case an AI agent acting as a production studio. If you want to try ADK, here is an ADK Quickstart to help you kick things off.

Third decision: Where to deploy the agents?

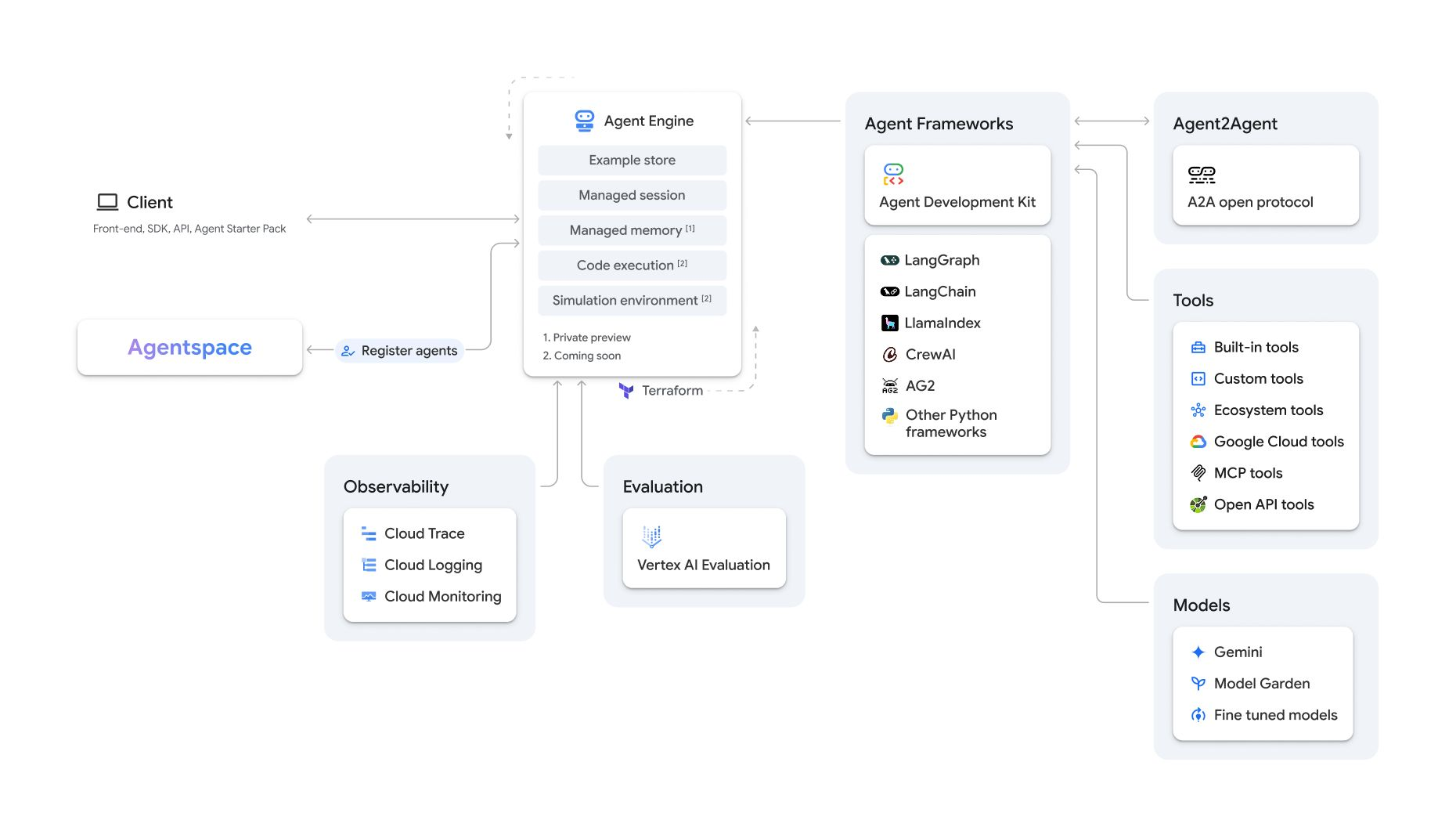

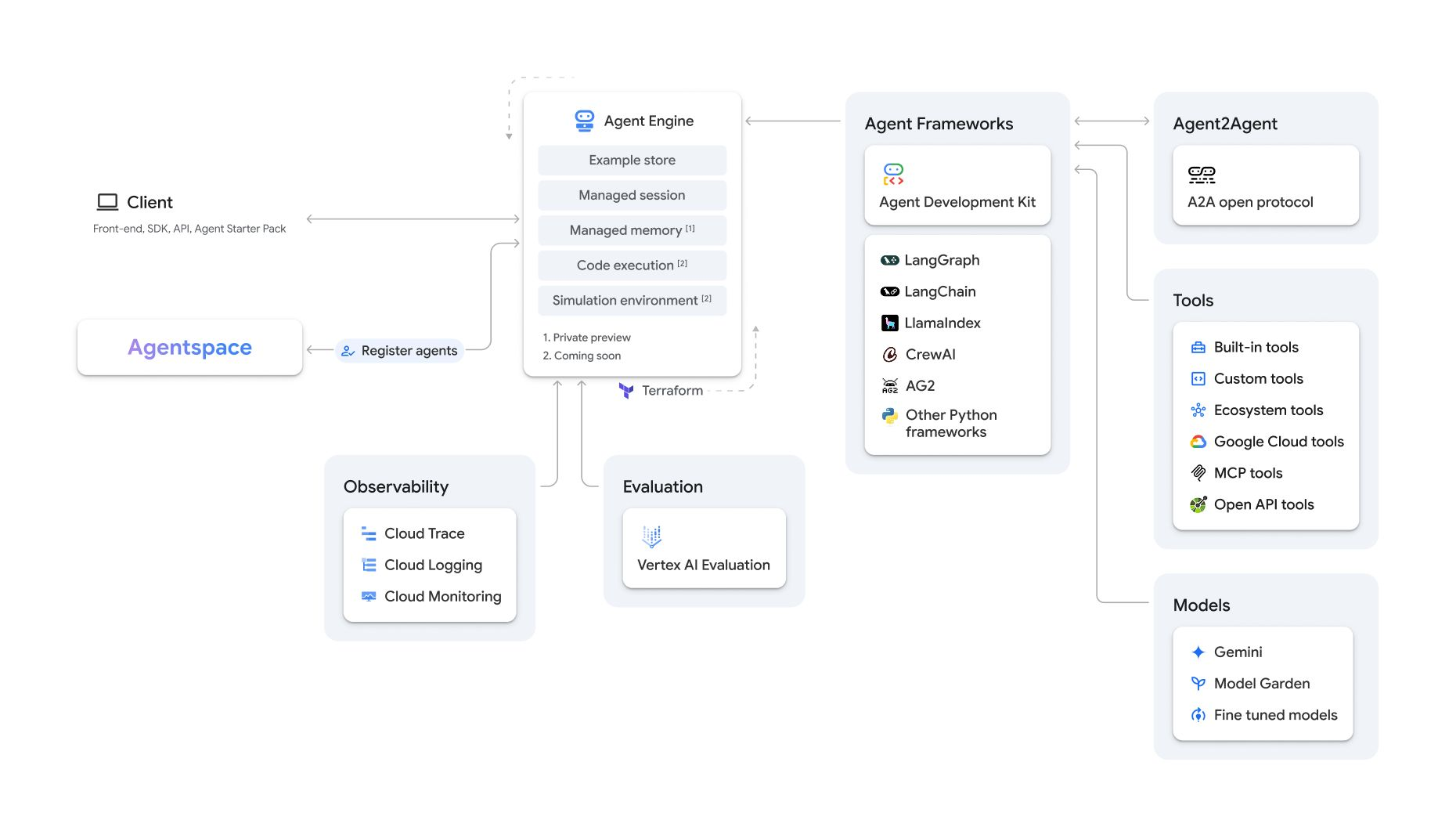

If you are building a simple app that needs to go live quickly, Cloud Run is an easy way to deploy it. You can follow the same instructions you would use to deploy any serverless web app on Cloud Run. Watch this video of building AI agents on Cloud Run. However, if you want a more enterprise-grade managed runtime with quality and evaluation, context management, and monitoring, Agent Engine is the way to go. Here is a quick start for Agent Engine. Agent Engine is a fully managed runtime that you can integrate with many of the previously mentioned frameworks, including ADK, LangGraph, and CrewAI (see the accompanying image from the official Google Cloud docs).
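For the Cloud Run route, the main contract to respect is that the container listens on the port given by the `PORT` environment variable (8080 by default). The sketch below shows one way to wrap an agent in a small HTTP service using only the Python standard library. It is an assumption-laden illustration: `run_agent`, `resolve_port`, and `AgentHandler` are hypothetical names, and `run_agent` is a placeholder where the orchestrator would be invoked.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def resolve_port(env=os.environ) -> int:
    """Cloud Run passes the listening port through the PORT environment variable."""
    return int(env.get("PORT", "8080"))


def run_agent(question: str) -> dict:
    """Hypothetical placeholder for invoking the orchestrator agent."""
    return {"question": question, "answer": "not implemented"}


class AgentHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the JSON request body and hand the question to the agent.
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length) or b"{}")
        payload = json.dumps(run_agent(body.get("question", ""))).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def main() -> None:
    # Bind to all interfaces so the service is reachable inside the container.
    HTTPServer(("0.0.0.0", resolve_port()), AgentHandler).serve_forever()
```

Calling `main()` from the container entry point starts the server. A production service would more likely use a web framework and add authentication and error handling, which this sketch leaves out.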

Get started

Building intelligent agents with generative AI, especially those capable of multimodal understanding, is akin to solving a complex puzzle. Many developers are finding that a prototypical agentic build involves a LangChain agent with Gemini Flash as the LLM. This post explored how to combine the power of Gemini models with open-source frameworks like LangChain and LangGraph. To get started right away, use this ADK Quickstart or visit our Agent Development GitHub.