How we built an AI basketball coach with Gemini on Vertex AI

Mauricio Ruiz

Creative Lead, Demos & Experiments, Google Cloud

Thomas Cummins

Field Solutions Architect, Gen AI, Google Cloud

What if anyone could take a better shot with a ball, a hoop, and a smartphone?

A pro’s jump shot can be as recognizable as their handwriting – easy to spot, but hard to replicate. So we asked ourselves: How can we use AI and real-time multimodal data (think: live video, sensor data, historical stats) to give players hyper-personalized coaching insights, down to the angle of their knee bend or the elbow position of their shooting hand?

Today at Google I/O, we’re showcasing our AI Basketball Coach, an AI experiment that turns Gemini 2.5 Pro into a jump shot coach. By combining a ring of Pixel cameras with Vertex AI, the coaching system connects AI motion capture, biomechanical analytics, and Gemini-powered coaching via text and voice. We first demoed this experience last month at Google Cloud Next 25, where it was trialed by hundreds of attendees. Visitors entered our half-court, took some jump shots, and AI took it from there.

Figure: How the half-court came together for the demo

More than a fun and unique way to play basketball, the demo showed how generative AI can help in any setting where complex motion must be understood, from athletics and manufacturing to retail and even robotics.

How we turned Gemini into a jump shot coach

This isn’t the first time we’ve tried using AI to help coach athletes. Last year, we set out to elevate your soccer game by using Gemini and Imagen 2 on Vertex AI to analyze a player’s performance in real time. At Pebble Beach, we created an AI swing coach to help golfers improve their swing.

These demos set a high bar, and they taught us two lessons:

- Gemini delivers its most accurate feedback when it combines raw video with ML-extracted motion data — skeletal landmarks, ball trajectory, and shot outcome.

- You should “train” your coach on accurate, domain-specific data — sourced directly from professional coaches — to ensure it learns from the best.

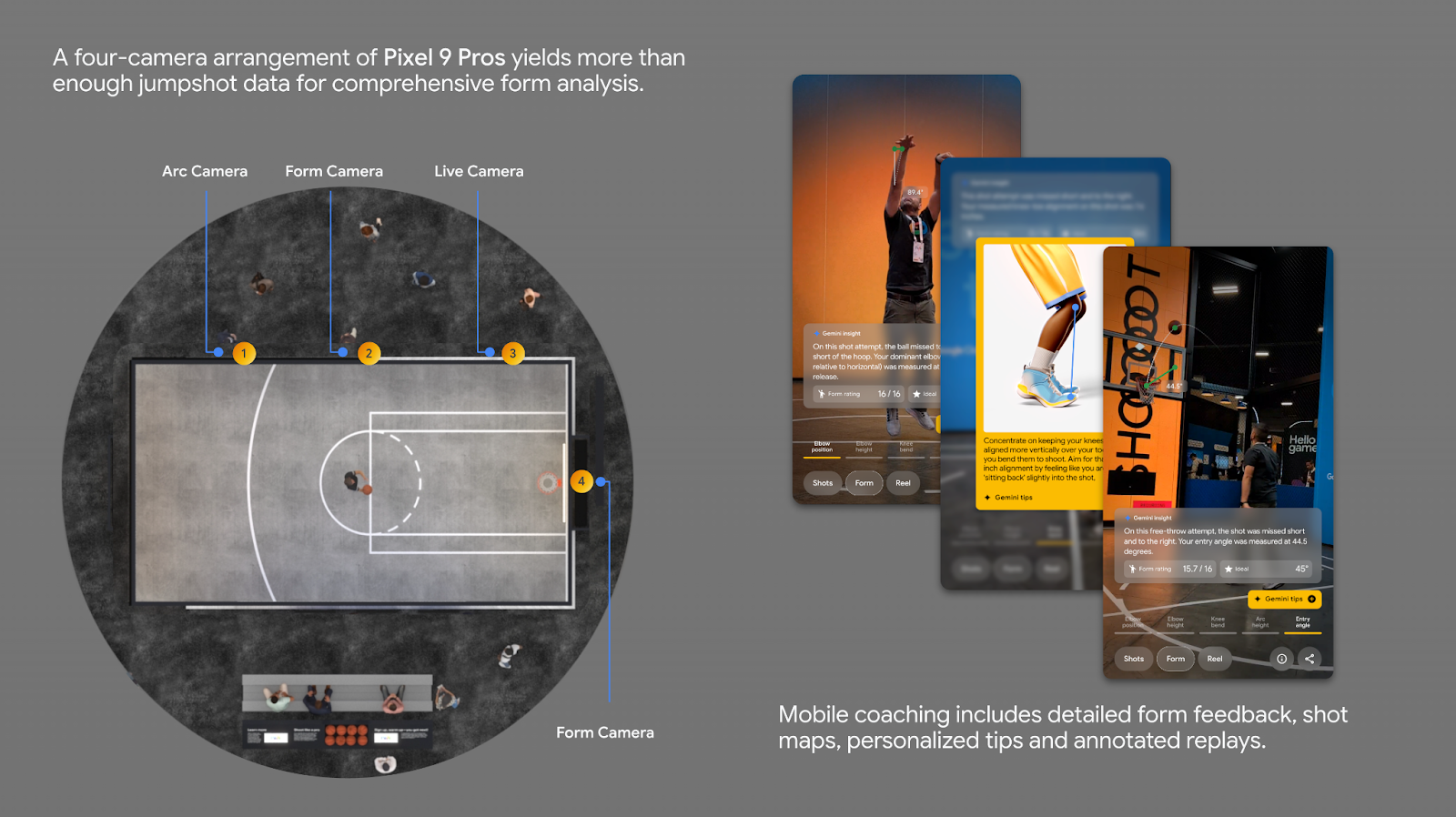

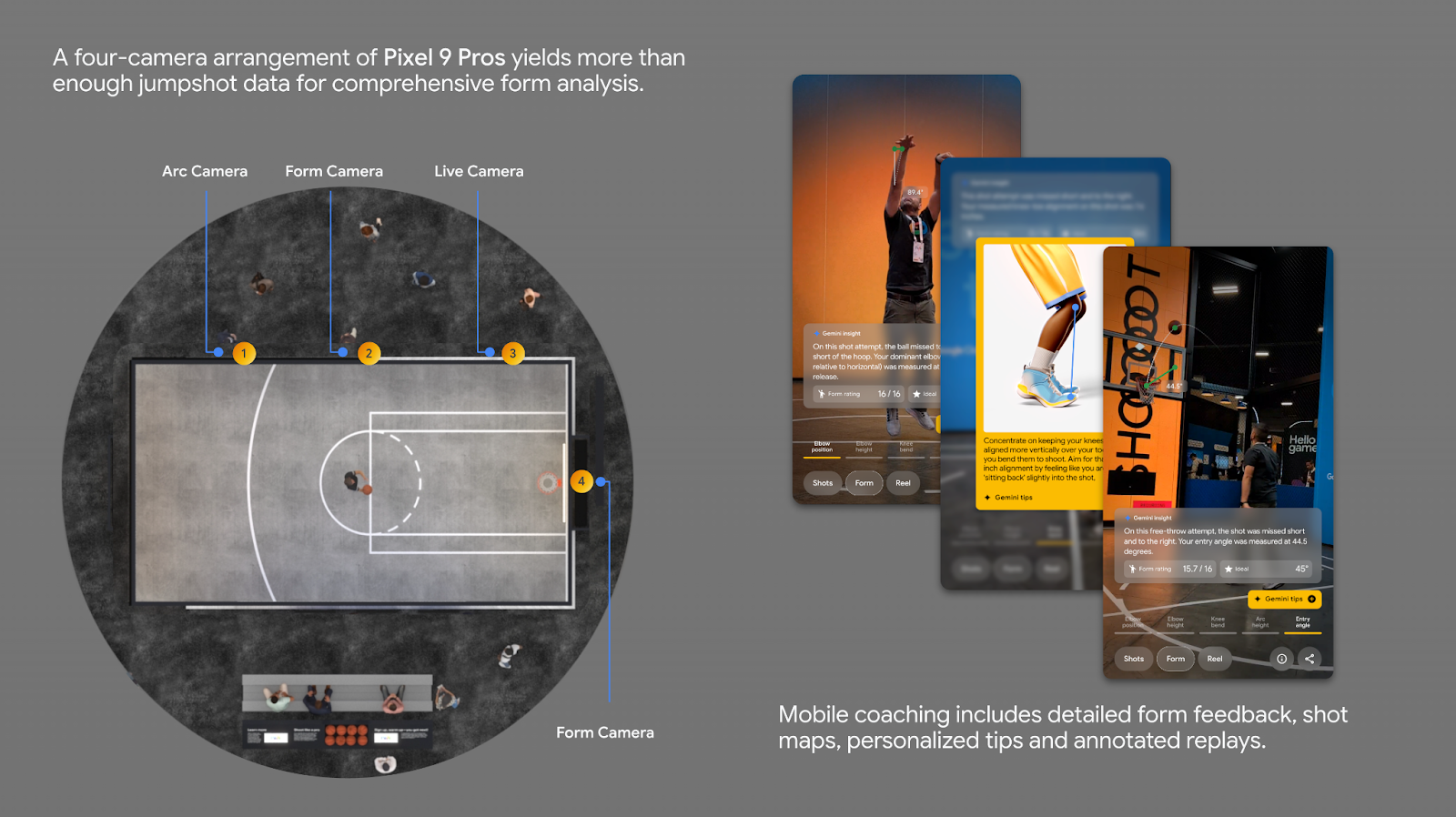

To address the first lesson, we had to make sure the coach could actually see what the participants were doing. The coach needed a 360-degree view, so we set up about half a dozen Pixel 9 Pro phones around the court, each recording 1080p video. This way, a player’s form, ball flight, and court position were all visible at the same time.
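Multi-angle analysis only works if frames from different phones describe the same instant. The post does not say how the feeds were synchronized, so the sketch below assumes each phone stamps its frames from a shared clock (for example, NTP-synchronized time) and pairs frames by nearest timestamp. The function name `align_frames` and the tolerance of half a frame interval at 30 fps are illustrative assumptions, not details from the demo.

```python
import bisect
from typing import Dict, List, Optional


def align_frames(
    reference_ts: List[float],
    camera_ts: Dict[str, List[float]],
    tolerance_s: float = 1 / 60,  # assumed: half a frame interval at 30 fps
) -> List[Dict[str, Optional[int]]]:
    """For each reference timestamp, find the nearest frame index on every camera.

    Assumes each timestamp list is sorted and in seconds on a shared clock.
    A camera gets None when its closest frame is further than tolerance_s away.
    """
    aligned = []
    for t in reference_ts:
        row: Dict[str, Optional[int]] = {}
        for camera, ts in camera_ts.items():
            i = bisect.bisect_left(ts, t)
            # The nearest frame is either just before t or at/after t.
            candidates = [j for j in (i - 1, i) if 0 <= j < len(ts)]
            best = min(candidates, key=lambda j: abs(ts[j] - t), default=None)
            if best is not None and abs(ts[best] - t) <= tolerance_s:
                row[camera] = best
            else:
                row[camera] = None
        aligned.append(row)
    return aligned


# Hypothetical timestamps: the "side" phone has no frame near the middle instant.
print(align_frames([0.0, 0.033, 0.066], {"front": [0.001, 0.034, 0.067], "side": [0.010, 0.060]}))
# → [{'front': 0, 'side': 0}, {'front': 1, 'side': None}, {'front': 2, 'side': 1}]
```

Returning None rather than the closest distant frame keeps a dropped frame from silently pairing two different moments of the shot.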

Once the cameras were in place, we made sure each phone ran MediaPipe locally. MediaPipe is Google’s open-source suite of ML libraries for computer vision. It offers on-device models for real-time video inference, including skeletal-landmark detection, hand tracking, object detection, and face tracking, which enable low-latency, modular vision pipelines. That combination of performance and ease of integration made it a natural fit for our demo.
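To make MediaPipe's output easier to work with downstream, a pipeline can name the handful of joints the shooting metrics need. The sketch below uses MediaPipe Pose's 33-landmark indexing and the Python Tasks API (`PoseLandmarker` in video mode). The demo's phones presumably used MediaPipe's mobile SDKs, so treat this as an illustration of the same model rather than the demo's code. The right-handed joint selection, the 0.5 visibility threshold, and the `Landmark` stand-in type are assumptions; the MediaPipe imports sit inside the functions so the helper can be used without the package installed.

```python
from typing import Dict, NamedTuple, Optional, Sequence, Tuple

# Indices into MediaPipe Pose's 33-landmark model ("right" is the player's right).
# Assumes a right-handed shooter; mirror these indices for a left-handed one.
SHOOTING_JOINTS = {
    "eye": 5,        # right eye
    "shoulder": 12,  # right shoulder
    "elbow": 14,     # right elbow
    "wrist": 16,     # right wrist
    "knee": 26,      # right knee
    "ankle": 28,     # right ankle
    "toe": 32,       # right foot index
}


class Landmark(NamedTuple):
    """Stand-in holding the fields used here from MediaPipe's NormalizedLandmark."""
    x: float  # 0..1 across the image width
    y: float  # 0..1 down the image height
    visibility: Optional[float] = None


def named_joints(
    landmarks: Sequence, width: int, height: int, min_visibility: float = 0.5
) -> Dict[str, Optional[Tuple[float, float]]]:
    """Convert one pose's normalized landmarks to pixel coordinates, dropping occluded joints."""
    joints: Dict[str, Optional[Tuple[float, float]]] = {}
    for name, index in SHOOTING_JOINTS.items():
        lm = landmarks[index]
        visible = lm.visibility is None or lm.visibility >= min_visibility
        joints[name] = (lm.x * width, lm.y * height) if visible else None
    return joints


def make_video_landmarker(model_path: str):
    """Create a PoseLandmarker for frame-by-frame video (requires the mediapipe package)."""
    from mediapipe.tasks import python as mp_python
    from mediapipe.tasks.python import vision

    options = vision.PoseLandmarkerOptions(
        base_options=mp_python.BaseOptions(model_asset_path=model_path),
        running_mode=vision.RunningMode.VIDEO,
    )
    return vision.PoseLandmarker.create_from_options(options)


def detect_pose(landmarker, frame_rgb, timestamp_ms: int):
    """Run the landmarker on one RGB frame (a NumPy array); returns 33 landmarks or None."""
    import mediapipe as mp

    image = mp.Image(image_format=mp.ImageFormat.SRGB, data=frame_rgb)
    result = landmarker.detect_for_video(image, timestamp_ms)
    return result.pose_landmarks[0] if result.pose_landmarks else None
```

With a real frame, `named_joints(detect_pose(landmarker, frame, t_ms), 1920, 1080)` would yield pixel positions for the shooting-side joints, assuming a landscape 1080p frame.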

This brings us to lesson two – “train” your coach on accurate, domain-specific data.

It’s like we always say: AI is only as good as the information you give it. For the AI basketball coach to be accurate, we knew we had to talk to real professionals. So we worked with our partners at the Golden State Warriors to define six essential criteria for shooting like the pros:

- Elbow position: The system assessed the vertical alignment of the dominant shooting hand’s forearm (the elbow-to-wrist axis) at the moment of release – crucial for accuracy and power.

- Release height: The vertical position of the elbow relative to the player’s eyes at release was measured, as this impacts shot trajectory and the ability to shoot over defenders.

- Knee-to-toe alignment: It analyzed lower body alignment and load posture when the player was in their crouch and beginning their upward shooting motion, key for balance and transferring energy efficiently.

- Arc height: The system tracked the maximum height of the ball's flight path, a critical factor for consistent shooting, as an ideal arc provides a greater margin for error at the rim.

- Entry angle: The angle at which the ball entered (or would have entered) the basket was calculated, another component influencing shot success.

- Player location: Gemini mapped the player’s position on the court against professional shot charts to contextualize the difficulty of each attempt.
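The three body-based criteria reduce to simple geometry on joint positions. The sketch below is one plausible way to compute them from pixel coordinates at the release frame (elbow position, release height) and the crouch frame (knee-to-toe alignment). The formulas, the normalizations, and the function names are our assumptions, since the post does not give them, and any measurement from a single 2D view depends on the camera angle.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in pixels; image y grows downward


def forearm_tilt_deg(elbow: Point, wrist: Point) -> float:
    """Tilt of the elbow-to-wrist axis away from vertical at release (0 = wrist straight above elbow)."""
    dx = wrist[0] - elbow[0]
    rise = elbow[1] - wrist[1]  # positive when the wrist is above the elbow
    return math.degrees(math.atan2(abs(dx), rise))


def release_height_ratio(eye: Point, elbow: Point, ankle: Point) -> float:
    """Elbow height above the eye line at release, as a fraction of eye-to-ankle height.

    Positive means the elbow is above the eyes. Dividing by body height makes the
    number comparable across players and camera distances.
    """
    body = ankle[1] - eye[1]
    if body <= 0:
        raise ValueError("ankle must appear below the eye")
    return (eye[1] - elbow[1]) / body


def knee_over_toe_ratio(knee: Point, ankle: Point, toe: Point) -> float:
    """Horizontal knee offset from the toe during the crouch, as a fraction of shin length.

    0 means the knee is stacked directly over the toe; the sign shows which side
    of the toe the knee drifts to in this camera view.
    """
    shin = math.dist(knee, ankle)
    if shin == 0:
        raise ValueError("knee and ankle coincide")
    return (knee[0] - toe[0]) / shin


print(round(forearm_tilt_deg((100, 200), (150, 150)), 1))         # 45.0
print(release_height_ratio((0, 300), (0, 250), (0, 800)))         # 0.1
print(knee_over_toe_ratio((110, 600), (110, 760), (130, 780)))    # -0.125
```

Expressing release height and knee offset as ratios rather than raw pixels keeps them stable when a player stands closer to or farther from a camera.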

With the right inputs in place, the player takes their shot and Gemini 2.5 Pro goes to work. It scans the video and timestamps four critical events—stance, crouch, release and landing—capturing overall form and the ball trajectory. Those markers drive our biomechanical calculations—elbow alignment, release height, and knee-to-toe alignment—and the results are displayed to the user.
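Turning Gemini's event timestamps into frame indices is what lets the biomechanical calculations run on the right frames. The sketch assumes Gemini returns a JSON object mapping each event name to seconds from the start of the clip; that response shape, the frame rate, and the ordering check are illustrative assumptions rather than the demo's actual contract.

```python
import json
from typing import Dict

EVENTS = ("stance", "crouch", "release", "landing")  # in the order they must occur


def events_to_frames(gemini_json: str, fps: float) -> Dict[str, int]:
    """Convert event timestamps (seconds) into frame indices, rejecting impossible orderings."""
    data = json.loads(gemini_json)
    missing = [name for name in EVENTS if name not in data]
    if missing:
        raise ValueError(f"missing events: {missing}")
    times = [float(data[name]) for name in EVENTS]
    # A model can misread a clip, so check stance <= crouch <= release <= landing.
    if any(later < earlier for earlier, later in zip(times, times[1:])):
        raise ValueError(f"events out of order: {dict(zip(EVENTS, times))}")
    return {name: round(t * fps) for name, t in zip(EVENTS, times)}


frames = events_to_frames('{"stance": 0.5, "crouch": 1.2, "release": 1.8, "landing": 2.3}', fps=30)
print(frames)  # {'stance': 15, 'crouch': 36, 'release': 54, 'landing': 69}
```

Validating the order before computing metrics means a misread clip fails loudly instead of producing a plausible-looking but wrong elbow or knee measurement.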

We run our analysis on Cloud Run, Google Cloud’s serverless platform, so it scales automatically with demand. Our code calls Gemini through the Vertex AI SDK, and every shot’s data is stored in Firestore, a NoSQL database that feeds the web UI with your results as soon as they’re ready.
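A Cloud Run service calling Gemini through the Vertex AI SDK might request the event timestamps as structured JSON and then write each shot to Firestore. The sketch below uses the `vertexai.generative_models` module and the `google-cloud-firestore` client. The model ID `gemini-2.5-pro`, the prompt wording, the schema, and the `shots` collection name are hypothetical, and the SDK imports sit inside the functions so the schema and prompt can be inspected without Google Cloud credentials.

```python
EVENT_NAMES = ["stance", "crouch", "release", "landing"]

# Hypothetical structured-output schema: one number of seconds per event.
EVENT_SCHEMA = {
    "type": "OBJECT",
    "properties": {
        name: {"type": "NUMBER", "description": f"Seconds from clip start to the {name}"}
        for name in EVENT_NAMES
    },
    "required": EVENT_NAMES,
}

EVENT_PROMPT = (
    "This clip shows one basketball jump shot. Report, in seconds from the start of the "
    "clip, when the player is set in their stance, reaches the bottom of the crouch, "
    "releases the ball, and lands."
)


def timestamp_events(video_uri: str, project: str, location: str = "us-central1") -> str:
    """Ask Gemini on Vertex AI for the four event timestamps; returns the JSON text."""
    import vertexai
    from vertexai.generative_models import GenerationConfig, GenerativeModel, Part

    vertexai.init(project=project, location=location)
    model = GenerativeModel("gemini-2.5-pro")  # model ID is an assumption
    response = model.generate_content(
        [Part.from_uri(video_uri, mime_type="video/mp4"), EVENT_PROMPT],
        generation_config=GenerationConfig(
            response_mime_type="application/json",
            response_schema=EVENT_SCHEMA,
        ),
    )
    return response.text


def save_shot(shot_id: str, shot: dict) -> None:
    """Write one shot's results so clients such as the web UI can read them."""
    from google.cloud import firestore

    firestore.Client().collection("shots").document(shot_id).set(shot)
```

For example, `timestamp_events("gs://your-bucket/shot-123.mp4", project="your-project")` would return a JSON string; the bucket, object, and project names are placeholders.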

Figure: How video capture leads to Gemini's multimodal analysis

Finally, with shot form and shot outcome data in hand, Gemini 2.5 Pro referenced our jump shot fundamentals guidelines (distilled into PDFs) and wrote insightful, plain-language advice. We instructed the model to keep its tone constructive, not just polite, so that it sounded like a real coach. Its feedback appeared as both voice and text on a React web dashboard, alongside replay videos annotated with the biomechanics data generated with MediaPipe.
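The coaching step combines the measured metrics, the shot outcome, and the guideline PDFs in one request, with the tone set by a system instruction. Below is a minimal sketch of that request with the Vertex AI SDK. The instruction text, prompt layout, metric names, and model ID are hypothetical, and the PDFs are assumed to live in Cloud Storage.

```python
from typing import Dict, List

# Hypothetical tone guidance; the post says only that feedback should be constructive, not just polite.
SYSTEM_INSTRUCTION = (
    "You are a basketball shooting coach. Be constructive rather than merely polite: "
    "name one strength, then give at most two specific corrections in plain language."
)


def build_coaching_prompt(metrics: Dict[str, float], pro_make_pct: float, made: bool) -> str:
    """Summarize one shot as text for the model to coach from."""
    outcome = "made" if made else "missed"
    metric_lines = [f"- {name}: {value:.2f}" for name, value in sorted(metrics.items())]
    return "\n".join(
        [f"The player {outcome} a shot from a spot where pros convert {pro_make_pct:.0%}."]
        + ["Measured form metrics:"]
        + metric_lines
        + ["Using the attached jump shot guidelines, give this player feedback."]
    )


def coach(prompt: str, guideline_pdf_uris: List[str], project: str, location: str = "us-central1") -> str:
    """Send the guideline PDFs plus the shot summary to Gemini and return its advice."""
    import vertexai
    from vertexai.generative_models import GenerativeModel, Part

    vertexai.init(project=project, location=location)
    model = GenerativeModel("gemini-2.5-pro", system_instruction=SYSTEM_INSTRUCTION)
    pdfs = [Part.from_uri(uri, mime_type="application/pdf") for uri in guideline_pdf_uris]
    return model.generate_content(pdfs + [prompt]).text


print(build_coaching_prompt({"forearm_tilt_deg": 12.5, "release_height_ratio": 0.1}, 0.38, made=True))
```

Keeping the tone rules in the system instruction, separate from the per-shot prompt, lets the same coaching persona apply to every shot while only the numbers change.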

How do you measure up against NBA and WNBA data?

We wanted to give the demo a bit of competitive spirit, so a live leaderboard tracked each participant’s performance. A 15-footer from the baseline is nowhere near as easy as a layup, and our scoring needed to reflect that. We built a reference map from 450,000+ NBA and WNBA shots, imported into BigQuery (Google Cloud’s petabyte-scale data warehouse) and explored in Colab Enterprise notebooks on Vertex AI. The data reveals the make percentage that professional players achieve from every square foot of the court.
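The reference map boils down to grouping shots into one-foot cells and averaging makes. In the demo this ran in BigQuery; the sketch below is an in-memory Python equivalent for illustration. It assumes shot coordinates in feet relative to the hoop and one `(x_ft, y_ft, made)` tuple per shot, neither of which the post specifies. The comment shows the shape of a comparable GROUP BY query, and the table and column names in it are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Cell = Tuple[int, int]  # (floor(x / cell_ft), floor(y / cell_ft))

# Comparable BigQuery aggregation (hypothetical table and columns):
#   SELECT CAST(FLOOR(x_ft) AS INT64) AS cx, CAST(FLOOR(y_ft) AS INT64) AS cy,
#          COUNT(*) AS attempts, AVG(IF(made, 1, 0)) AS make_pct
#   FROM `project.dataset.pro_shots`
#   GROUP BY cx, cy


def make_pct_by_cell(
    shots: Iterable[Tuple[float, float, bool]], cell_ft: float = 1.0, min_attempts: int = 1
) -> Dict[Cell, float]:
    """Pro make percentage per court cell; cells with too few attempts are left out."""
    attempts: Dict[Cell, int] = defaultdict(int)
    makes: Dict[Cell, int] = defaultdict(int)
    for x_ft, y_ft, made in shots:
        cell = (math.floor(x_ft / cell_ft), math.floor(y_ft / cell_ft))
        attempts[cell] += 1
        makes[cell] += int(made)
    return {cell: makes[cell] / n for cell, n in attempts.items() if n >= min_attempts}


grid = make_pct_by_cell([(0.2, 0.5, True), (0.8, 0.1, False), (5.5, 10.2, True)])
print(grid)  # {(0, 0): 0.5, (5, 10): 1.0}
```

The `min_attempts` cutoff is an assumed safeguard: a cell with a single recorded pro shot would otherwise report 0% or 100%, which says little about real difficulty.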

During the demo, the system captures your X-Y coordinates on the court the instant you shoot, looks up the pro baseline for that spot, and rates the shot’s difficulty based on the pro make percentage. Sink a shot that even the pros convert less than 40% of the time and you earn extra credit. Brick an “easy” layup and your score drops accordingly.
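The post describes the scoring rule only qualitatively. One simple way to get that behavior is to score each attempt as its outcome minus the pro make percentage at that spot, so hard makes earn a lot, easy misses cost a lot, and the under-40% threshold flags extra credit. The formula, the 100-point scale, the fallback percentage for unmapped cells, and the function names below are our assumptions, not the demo's actual scoring.

```python
import math
from typing import Dict, Tuple

EXTRA_CREDIT_BELOW = 0.40  # "a shot that even the pros convert <40% of the time"


def score_attempt(
    x_ft: float,
    y_ft: float,
    made: bool,
    pro_grid: Dict[Tuple[int, int], float],
    fallback_pct: float = 0.45,  # hypothetical default for cells missing from the map
) -> Tuple[float, bool]:
    """Return (points, extra_credit) for one attempt using a 1-ft pro make-percentage grid."""
    cell = (math.floor(x_ft), math.floor(y_ft))
    pro_pct = pro_grid.get(cell, fallback_pct)
    # Outcome minus expectation: makes score 100 * (1 - p), misses score -100 * p.
    points = 100.0 * ((1.0 if made else 0.0) - pro_pct)
    extra_credit = made and pro_pct < EXTRA_CREDIT_BELOW
    return points, extra_credit


grid = {(0, 2): 0.62, (14, 9): 0.35}  # toy values, not real NBA/WNBA data
print(score_attempt(0.4, 2.7, made=False, pro_grid=grid))  # missed "easy" shot: large penalty
print(score_attempt(14.2, 9.9, made=True, pro_grid=grid))  # made hard shot: large reward plus extra credit
```

Because the expected score at any spot is zero for a shooter who matches the pros, this kind of rule ranks players by how much they beat the pro baseline rather than by how many easy shots they attempt.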

Built for basketball—and adaptable far beyond

While AI Basketball Coach focused on jump shots, the underlying AI analytics pipeline applies to any domain where movement matters. With Vertex AI and Gemini, businesses can build intelligent motion analytics systems in a variety of domains, including:

- Sports coaching: Beyond basketball — golf, baseball, physical conditioning

- Physical therapy: Tracking range of motion and recovery progression

- Retail environments: Observing movement for behavior modeling and store layout

- Manufacturing: Monitoring posture, repetition, and efficiency

- Security: Analyzing live video for anomaly detection

Wherever there’s a need to derive intelligence from physical motion, Vertex AI on Google Cloud and Gemini can turn observation into insight.

Explore our AI Basketball Coach stack

Figure: Our AI Basketball Coach demo stack

- Pixel 9 Pro and on-device MediaPipe: Capture synchronized multi-angle video, extract 33 skeletal landmarks per frame, and track the ball.

- Cloud Storage: Upload and persist raw video, landmark data, and ball-flight data.

- Vertex AI (Gemini 2.5 Pro): Prompt Gemini with video, images, biomechanical measurement data, PDFs, and other grounding data sources to understand a player’s movements, pinpoint the exact moment when key events occur (stance, crouch, release, landing), and generate accurate, grounded, and personalized coaching advice.

- Cloud Run: Compute form metrics (elbow position, ball arc height, and entry angle) in containerized services, without managing any underlying infrastructure.

- Firestore DB: Store player form metrics, difficulty labels, and coaching analysis for instant, queryable access from multiple clients, including the React frontend where players view their analysis.

- BigQuery: Benchmark shot difficulty against a 450k-shot NBA/WNBA heat map.

- Gemini Live: Provide on-court commentary and a personalized welcome message for each player as they warm up to take their shot.
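The Cloud Run form-metrics step in the stack above includes ball arc height and entry angle. Ignoring air drag, a ball's height is a quadratic function of its horizontal position, so one approach is to fit a parabola to the tracked ball positions and read both metrics off the fit. The sketch assumes the ball track has already been converted from pixels to feet in the vertical plane of the shot (horizontal distance toward the hoop, height above the floor), which would require camera calibration the post does not describe. The least-squares fit, the 10-foot rim height default, and the function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def fit_parabola(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float, float]:
    """Least-squares fit of y = a*x^2 + b*x + c via the normal equations and Cramer's rule."""
    s = [sum(x**k for x in xs) for k in range(5)]
    t = [sum(y * x**k for x, y in zip(xs, ys)) for k in range(3)]
    m = [[s[4], s[3], s[2]], [s[3], s[2], s[1]], [s[2], s[1], s[0]]]
    rhs = [t[2], t[1], t[0]]
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("need at least three distinct horizontal positions")
    coeffs = []
    for col in range(3):
        replaced = [row[:] for row in m]
        for r in range(3):
            replaced[r][col] = rhs[r]
        coeffs.append(_det3(replaced) / d)
    a, b, c = coeffs
    return a, b, c


def arc_and_entry_angle(
    xs: Sequence[float], ys: Sequence[float], rim_height: float = 10.0
) -> Tuple[float, float]:
    """Return (apex height, entry angle in degrees) for a shot moving toward +x."""
    a, b, c = fit_parabola(xs, ys)
    if a >= 0:
        raise ValueError("track does not curve downward")
    apex = c - b * b / (4 * a)
    disc = b * b - 4 * a * (c - rim_height)
    if disc < 0:
        raise ValueError("fitted arc never reaches rim height")
    x_rim = (-b - math.sqrt(disc)) / (2 * a)  # later crossing, on the way down (a < 0)
    slope = 2 * a * x_rim + b
    return apex, math.degrees(math.atan(abs(slope)))


# Toy track y = -x^2 + 4x with a rim at height 3: apex about 4.0, entry angle about 63.4 degrees.
xs = [i * 0.5 for i in range(7)]
print(arc_and_entry_angle(xs, [-x * x + 4 * x for x in xs], rim_height=3.0))
```

Fitting all tracked points, rather than reading the highest detected ball position, smooths out frame-to-frame detection jitter and still yields an entry angle for misses that never reach the rim cleanly.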

Thanks to our partners at the Golden State Warriors, and the many engineering, product, and creative teams across Google Cloud who helped bring this experience to life.

Contributors:

Thomas Cummins, Blake Rutledge, Alok Pattani, Zack Akil, Fernando Lammoglia, Katherine Larson, Noel Tantinya, Noah Bassetti-Blum, Lauren Kehoe, Mei Rawlinson.